One of the problem areas that the Sector Skills Alliance intends to address relates to advanced production processes involving automated robotics.

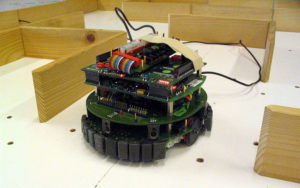

Industrial robots are, in fact, becoming smarter, faster and cheaper, and they are being called upon to go beyond traditional repetitive, onerous or even dangerous tasks such as welding and materials handling. They are taking on more human-like capabilities and traits such as sensing, dexterity, memory, trainability and object recognition.

However, most of today's robots are still nearly deaf and blind. Sensors can provide some limited feedback so that a robot can do its job, but compared with the senses and abilities of even the simplest living things, robots have a very long way to go.

For that reason, one research trend in robotics is to improve sensing capability with regard to precise motion.

A sensor sends information, in the form of electronic signals, back to the robot controller, telling it about its surroundings or the state of the world around it.
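To make this feedback path concrete, here is a minimal Python sketch of a single controller step. It assumes the readings have already been converted from raw electronic signals into numbers; the `SensorReading` type, the sensor names `bumper` and `distance_m`, the 0.3 m threshold and the action names are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class SensorReading:
    """One reading as it reaches the controller (hypothetical format)."""
    name: str
    value: float  # already converted from the raw electronic signal


def control_step(readings: list[SensorReading], stop_distance_m: float = 0.3) -> str:
    """Pick an action from the latest readings using a toy rule set (assumed)."""
    state = {r.name: r.value for r in readings}
    # A closed contact switch (value above 0.5) means the robot has hit something.
    if state.get("bumper", 0.0) > 0.5:
        return "stop"
    # A range sensor reporting an obstacle closer than the threshold.
    if state.get("distance_m", float("inf")) < stop_distance_m:
        return "slow_down"
    return "continue"


print(control_step([SensorReading("distance_m", 0.2)]))  # slow_down
```

A real controller would run a step like this in a loop at a fixed rate and drive actuators directly rather than return strings.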

Sight, sound, touch, taste, and smell are the kinds of information we get from our world. Robots can be designed and programmed to gather specific information that goes beyond what our five senses can tell us, for example contact, distance, light and sound level, strain, rotation, magnetism, smell, temperature, inclination, pressure or altitude.

For instance, a robot sensor might “see” in the dark, detect tiny amounts of invisible radiation or measure movement that is too small or fast for the human eye to see.

Sensors can be simple or complex, depending on how much information they need to handle. A switch is a simple on/off sensor used for turning the robot on and off, while a human retina is a complex sensor that uses more than a hundred million photosensitive elements (rods and cones).

A visual sensing system can be based on anything from traditional cameras, sonar and lasers to newer technologies such as radio frequency identification (RFID), in which a reader transmits radio signals to a tag on an object and the tag responds with an identification code.

Visual sensors help robots identify their surroundings and take appropriate action by analyzing images acquired from the immediate environment.

Touch sensory signals can be generated by the robot’s own movements, and they can be very useful, for example, to map a surface in a hostile environment. A recent solution adds an adaptive filter, based on an optimization algorithm, to the robot’s logic; this enables the robot to predict the sensor signals that its own internal motions will produce. The method improves contact detection and reduces false interpretations.
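The source does not name the optimization algorithm behind this adaptive filter. As a stand-in, the following sketch uses the classic least-mean-squares (LMS) filter, a stochastic-gradient method: it learns from contact-free data how recent motor commands map onto the touch-sensor signal, and then flags samples whose measured value departs from that prediction. The function names, the 3-tap filter length, the step size `mu` and the `threshold` are illustrative assumptions.

```python
import numpy as np


def _windows(motor, taps):
    # Row i holds motor[n], motor[n-1], ..., motor[n-taps+1] for n = i + taps - 1.
    return np.array([motor[n - taps + 1 : n + 1][::-1] for n in range(taps - 1, len(motor))])


def train_lms(motor, sensor, taps=3, mu=0.1, epochs=50):
    """Fit FIR weights that predict the self-induced sensor signal (LMS stand-in)."""
    X = _windows(motor, taps)
    y = sensor[taps - 1 :]
    w = np.zeros(taps)
    for _ in range(epochs):
        for x_n, y_n in zip(X, y):
            err = y_n - w @ x_n
            w += mu * err * x_n  # stochastic-gradient step on the squared error
    return w


def detect_contact(w, motor, sensor, threshold=0.3):
    """Flag samples where the measured signal departs from the self-motion prediction."""
    taps = len(w)
    residual = sensor[taps - 1 :] - _windows(motor, taps) @ w
    return np.abs(residual) > threshold
```

Training only on contact-free data keeps the filter from learning genuine contacts as part of the robot's self-motion; the residual that remains after subtracting the prediction is what the detector thresholds. Note that the returned flags are offset by `taps - 1` samples relative to the input arrays.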

In recent years, many studies have also addressed the use of touch as a stimulus for interaction. One example is the robotic seal PARO, which reacts to many stimuli from human interaction, including touch.

Robots can also use accurate audio sensors. Recent solutions employ, for example, piezoelectric devices that convert force into voltage and help eliminate the vibration caused by internal noise.
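An idealized first-order model of this force-to-voltage conversion (longitudinal mode, open circuit, ignoring leakage and frequency effects) relates the applied force $F$ to the generated charge $Q$ through the piezoelectric coefficient $d_{33}$, and the charge to the voltage $V$ through the element's capacitance $C$. The numbers in the second line are purely illustrative assumptions, not values taken from any particular sensor:

$$
Q = d_{33}\,F, \qquad V = \frac{Q}{C} = \frac{d_{33}\,F}{C}
$$

$$
d_{33} = 400\ \text{pC/N},\; F = 1\ \text{N},\; C = 1\ \text{nF} \;\Rightarrow\; Q = 400\ \text{pC},\; V = \frac{400 \times 10^{-12}\ \text{C}}{1 \times 10^{-9}\ \text{F}} = 0.4\ \text{V}
$$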

One current voice activity detection (VAD) system uses the complex spectrum circle centroid (CSCC) method and a maximum signal-to-noise ratio (SNR) beamformer. With two microphones, this VAD system enables the robot to locate the source of instructional speech by comparing the signal strengths at the two microphones.
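The CSCC method and the maximum-SNR beamformer are beyond a short example, so the sketch below swaps in a much simpler technique to illustrate the underlying idea: a short-time energy threshold serves as the voice activity detector, and the robot picks the side whose microphone receives more energy during the voiced frames. The frame length, the threshold and the `vad_and_side` name are assumptions, and treating the louder microphone as the nearer one ignores reverberation and unequal microphone gains.

```python
import numpy as np


def frame_energy(x, frame_len):
    """Mean-square energy of each non-overlapping frame."""
    n_frames = len(x) // frame_len
    frames = x[: n_frames * frame_len].reshape(n_frames, frame_len)
    return np.mean(frames ** 2, axis=1)


def vad_and_side(left, right, frame_len=160, threshold=1e-3):
    """Energy-threshold VAD over two channels, then pick the louder side.

    Returns (active_mask, side), where side is "left", "right" or None.
    """
    e_l = frame_energy(left, frame_len)
    e_r = frame_energy(right, frame_len)
    # A frame counts as voiced when the average channel energy exceeds the threshold.
    active = (e_l + e_r) / 2 > threshold
    if not active.any():
        return active, None
    # Compare signal strength over voiced frames only; the louder mic is assumed nearer.
    side = "left" if e_l[active].sum() > e_r[active].sum() else "right"
    return active, side
```

At 16 kHz, the default 160-sample frame corresponds to 10 ms, a common analysis frame size for speech.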

Furthermore, automated robots require a guidance system to determine the ideal path for performing their task. At the molecular scale, however, nanorobots lack such a guidance system because individual molecules cannot store complex motions and programs. One way to achieve motion in such an environment is to replace sensors with chemical reactions.